Attempting to implement a Continuous Deployment workflow whilst still having fun can be tricky. Even more so if you want to use reasonably new tech, and even more if you want to use free tech!

If you’re not planning on using one of the cloud CI solutions then you’re probably (hopefully) looking at something like TeamCity, Jenkins, or CruiseControl.Net. I went with TeamCity, having played with CruiseControl.Net and not liked it much, and having never heard of Jenkins until a few weeks ago.. ahem..

So; my intended ideal workflow would be along the lines of:

- change some code

- commit to local git

- push to remote repo

- TeamCity picks it up, builds, runs tests, etc. (combining and minifying static files, but that’s for another blog post)

- creates a nuget package

- deploys to a private nuget repo

- subscribed endpoint servers pick up/are informed of the updated package

- endpoint servers install the updated package

Here’s where I’ve got with that so far:

1) On the development machine

a. Within your Visual Studio project ensure the bin directory is included

I need the compiled DLLs to be included in my NuGet package. Since I’m using the csproj file as the package definition for NuGet instead of a nuspec file, including the bin directory in the project is how I get them in there.

Depending on your VCS IDE integration, this might have nasty implications which are currently out of scope of my proof of concept (e.g. including the bin dir in the IDE causes the DLLs to be checked into your VCS – ouch!). I’m sure there are better ways of doing this, I just don’t know them yet. If you do, please leave a comment!

In case you don’t already know how to include stuff into your Visual Studio project (hey, I’m not one to judge! There’s plenty of basic stuff I still don’t know about my IDE!):

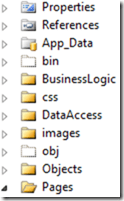

i. The bin dir not included:

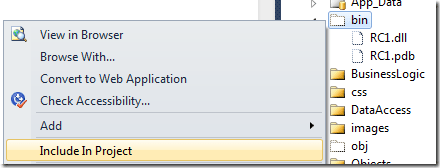

ii. Click the “don’t lie to me“ button*:


iii. Select “include in project”:

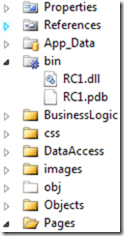

iv. The bin dir is now included:

b. Configure source control

Set up your code directory to use something that TeamCity can hook into (I’ve used SVN and Git successfully so far)

That’s about it.

2) On the build server

a. Install TeamCity

i. Try to follow the instructions on the TeamCity wiki

b. Install the NuGet package manager add-in for TeamCity

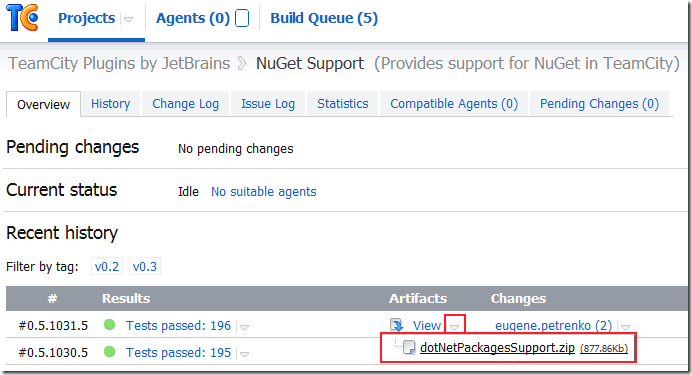

i. Head to the JetBrains TeamCity public repo

ii. Log in as Guest

iii. Select the zip artifact from either the latest tag (or if you’re feeling cheeky the latest build):

iv. Save it into your own TeamCity’s plugins folder

v. Restart your TeamCity instance

(The next couple of steps are taken from Hadi Hariri’s blog post over at JetBrains which I followed to get this working for me)

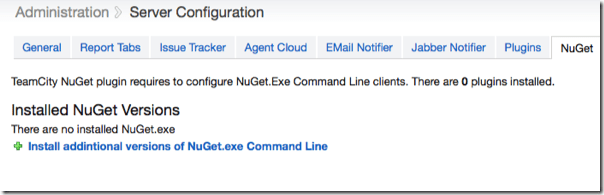

vi. Click on Administration | Server Configuration. If the plug-in installed correctly, you should now have a new Tab called NuGet

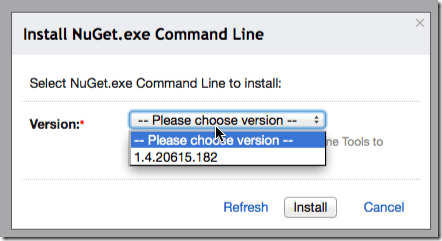

vii. Click on the “Install additional versions of the NuGet.exe Command Line” link. TeamCity will read from the feed and display the available versions in the dialog box. Select the version you want and click Install

c. Configure TeamCity

i. Set it up to monitor the correct branch

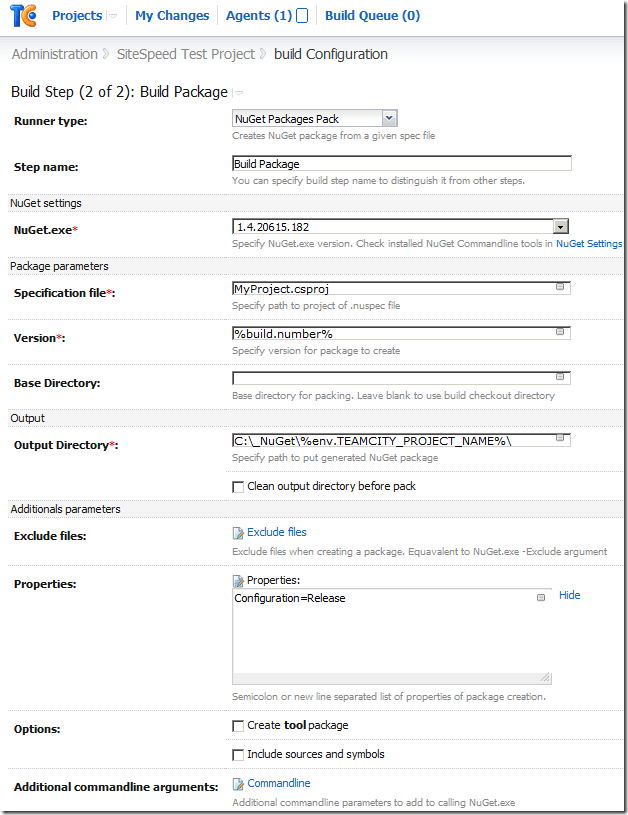

ii. Create a nuget package as a build step, setting the output directory to a location that can be accessed from your web server; I went for a local folder that had been configured for sharing:

There is also a great blog post about exposing your NuGet package as a downloadable artifact of the build, and I’m currently adapting this solution to do that. I’m getting stuck with the Publish step though, since I only want to publish to a private feed. Hmm. Next blog post, perhaps.
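As a rough command-line equivalent of the package-creation build step in (ii), here is a minimal sketch that builds the arguments for nuget pack against the csproj. The helper name, the project path and the share path are all hypothetical, and nuget.exe is assumed to be on the PATH; -OutputDirectory and -Properties are standard nuget pack options.

```powershell
# Hypothetical helper: builds the argument list for "nuget pack".
# The project file and output directory passed in are placeholders, not real locations.
function Get-NuGetPackArgs {
    param(
        [string]$ProjectFile,
        [string]$OutputDirectory,
        [string]$Configuration = "Release"
    )
    # Packing the csproj (rather than a nuspec) is the approach described in step 1a
    return @(
        "pack", $ProjectFile,
        "-OutputDirectory", $OutputDirectory,
        "-Properties", "Configuration=$Configuration"
    )
}

# Example usage (assumes nuget.exe is on the PATH):
# $packArgs = Get-NuGetPackArgs -ProjectFile "RposboWeb\RposboWeb.csproj" -OutputDirectory "\\build\_packages"
# & nuget @packArgs
```

Keeping the argument list in a function makes it easy to eyeball what would be run before actually invoking nuget.exe.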

3) On the web server (or “management” server)

a. Install NuGet for the command line

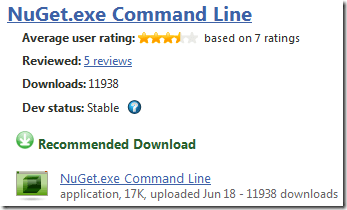

i. Head over to http://nuget.codeplex.com/releases

ii. Select the command line download:

iii. Save it somewhere on the server and add it to your %PATH% if you like

b. Configure installation of the nuget package

i. Get the package onto the server it needs to be installed on

Using Nuget, install the package from the TeamCity package output directory:

[code]nuget install "<MyNuGetProject>" -Source <path to private nuget repo>[/code]

e.g.

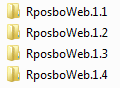

[code]nuget install "RposboWeb" -Source \\build\_packages\[/code]

This will generate a new folder for the updated package in the current directory. What’s awesome about this is that you get a history of your updates, so, breaking changes notwithstanding, you could roll back or update just by pointing IIS at a different folder.
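Because each install lands in its own folder, finding the newest one comes down to sorting the folder names by version. Here is a minimal sketch, assuming the folders follow NuGet's default "<PackageId>.<Version>" naming; the function name is made up for illustration.

```powershell
# Hypothetical helper: given folder names such as "RposboWeb.1.2" and "RposboWeb.1.12",
# return the name carrying the highest version.
# Assumes NuGet's default "<PackageId>.<Version>" folder naming.
function Get-LatestPackageFolder {
    param(
        [string]$PackageId,
        [string[]]$FolderNames
    )
    $prefix = "$PackageId."
    $FolderNames |
        # Keep only names of this package whose suffix parses as a version number
        Where-Object { $_.StartsWith($prefix) -and ($_.Substring($prefix.Length) -as [version]) } |
        # Cast to [version] so 1.12 sorts after 1.2 (a plain string sort would get this wrong)
        Sort-Object { [version]$_.Substring($prefix.Length) } |
        Select-Object -Last 1
}

# Example usage against the install directory (path is a placeholder):
# $names = Get-ChildItem "D:\Sites" | Where-Object { $_.PSIsContainer } | ForEach-Object { $_.Name }
# Get-LatestPackageFolder -PackageId "RposboWeb" -FolderNames $names
```

Names whose suffix does not parse as a plain version number (pre-release tags, for instance) are skipped by this sketch, so it would need extending if you use them.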

Which brings me on to…

ii. Update IIS to reference the newly created folder

Using appcmd, change the folder your website references to the “content” folder within the installed nuget package:

[code]appcmd.exe set vdir "<MyWebRoot>/" /physicalpath:"<location of installed package>\content"[/code]

e.g.

[code]appcmd.exe set vdir "RposboWeb/" /physicalpath:"D:\Sites\RposboWeb 1.12\content"[/code]
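The same appcmd call can be composed from PowerShell once the package folder is known. This is only a sketch: the helper name and the paths in the usage comment are illustrative, and it assumes appcmd lives in the usual inetsrv folder.

```powershell
# Hypothetical helper: builds the appcmd arguments that repoint a virtual directory
# at the "content" folder of an installed package.
function Get-AppCmdVdirArgs {
    param(
        [string]$VdirName,       # e.g. "RposboWeb/"
        [string]$PackageFolder   # full path of the installed package folder
    )
    # Strip any trailing backslash so the joined path has exactly one separator
    $contentPath = $PackageFolder.TrimEnd('\') + '\content'
    return @("set", "vdir", $VdirName, "/physicalpath:$contentPath")
}

# Example usage (paths are placeholders):
# $appCmdArgs = Get-AppCmdVdirArgs -VdirName "RposboWeb/" -PackageFolder "D:\Sites\RposboWeb 1.12"
# & "$env:systemroot\system32\inetsrv\appcmd.exe" @appCmdArgs
```

Rolling back would then just mean calling the same helper with an older package folder.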

So, the obvious tricky bit here is getting the name of the package that’s just been installed in order to update IIS. At first I thought I could create a PowerShell build step in TeamCity which takes the version as a parameter and creates an update batch file using something like the below:

[code]param([string]$version = "version")

# PowerShell does not treat \" as an escaped quote, so the lines written to the
# batch file use single-quoted strings, which keep the embedded double quotes
# and %systemroot% exactly as typed.
$stream = [System.IO.StreamWriter] "c:\_NuGet\install_latest.bat"

$stream.WriteLine('nuget install "<MyNugetProject>" -Source <path to private nuget repo>')

$stream.WriteLine('"%systemroot%\system32\inetsrv\appcmd.exe" set vdir "<MyWebRoot>/" /physicalpath:"<root location of installed package>' + $version + '\content"')

$stream.Close()[/code]

However, my PowerShell knowledge is minuscule so this automated installation file generation isn’t working yet…

I’ll continue working on both this installation file generation version and also a PowerShell version that uses the IIS7 administration provider instead of appcmd.
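For the provider-based route, a minimal sketch might look like the following. It assumes a Windows server where the WebAdministration module is available (on IIS 7.0 it ships as a snap-in instead, loaded with Add-PSSnapin); the function name, site name and package path are placeholders.

```powershell
# Sketch only: requires Windows with the IIS WebAdministration module available.
# The site name and package folder passed in are placeholders.
function Set-SitePhysicalPath {
    param(
        [string]$SiteName,
        [string]$PackageFolder
    )
    Import-Module WebAdministration
    $contentPath = $PackageFolder.TrimEnd('\') + '\content'
    # The IIS: drive is provided by the WebAdministration module
    Set-ItemProperty -Path "IIS:\Sites\$SiteName" -Name physicalPath -Value $contentPath
}

# Example usage (run from an elevated PowerShell prompt):
# Set-SitePhysicalPath -SiteName "RposboWeb" -PackageFolder "D:\Sites\RposboWeb 1.12"
```

This changes the physical path of the site's root application, which is the same thing the appcmd vdir call above does for "<MyWebRoot>/".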

In conclusion

- change some code – done (1)

- commit to local git – done (1)

- push to remote repo – done (1)

- TeamCity picks it up, builds, runs tests, etc – done (2)

- creates a nuget package – done (2)

- deploys to a private nuget repo – done (2)

- subscribed endpoint servers pick up/are informed of the updated package – done/in progress (3)

- endpoint servers install the updated package – done/in progress (3)

Any help on expanding my knowledge gaps is appreciated – please leave a comment or tweet me! I’ll add another post in this series as I progress.

References

NuGet private feeds

http://haacked.com/archive/2010/10/21/hosting-your-own-local-and-remote-nupack-feeds.aspx

http://blog.davidebbo.com/2011/04/easy-way-to-publish-nuget-packages-with.html

TeamCity NuGet support

http://blogs.jetbrains.com/dotnet/2011/08/native-nuget-support-in-teamcity/

NuGet command line reference

http://docs.nuget.org/ | http://docs.nuget.org/docs/reference/command-line-reference

IIS7 & Powershell

http://blogs.iis.net/thomad/archive/2008/04/14/iis-7-0-powershell-provider-tech-preview-1.aspx

http://learn.iis.net/page.aspx/447/managing-iis-with-the-iis-70-powershell-snap-in/

http://technet.microsoft.com/en-us/library/ee909471(WS.10).aspx

* to steal a nice quote from Scott Hanselman