In my previous articles I took you through the process of setting up your own private WebPageTest, either via the AWS interface or via Terraform (infrastructure as code).

By default, this creates a private WebPageTest instance that uses the latest code on the release branch of the official WPO-Foundation GitHub repo for WebPageTest.

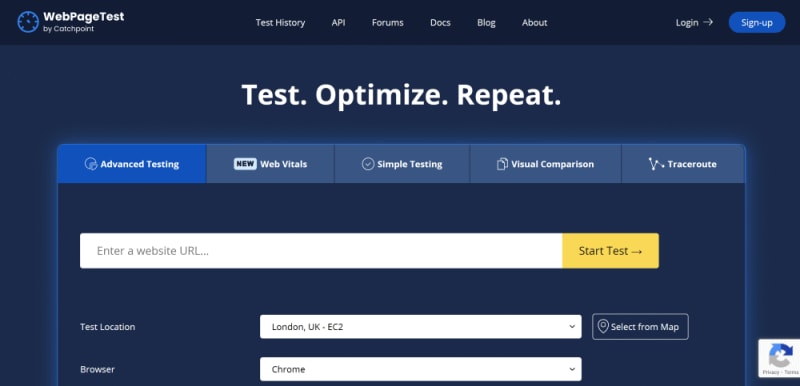

This is great if you like the newer UI (it’s not as up to date as the official WebPageTest.org site, which obviously evolves much faster), but it might not be what you want for a couple of reasons:

- You preferred the original, classic, WebPageTest UI, or

- You plan to monetise your private WebPageTest setup, which violates the `release` branch’s `LICENSE.md` entry about “Noncompete” and “Competition”

Since WebPageTest existed loooong before Catchpoint bought it up, the original version of the code (the fully open source version) still exists, and has no such non-competition concerns. It does have a LICENSE file, but that just lists all the licenses associated with the other libraries WebPageTest uses.

By the way, the same is true for the WebPageTest agent (`master` branch & `release` branch vs `apache` branch), so bear that in mind if you’re creating a competing product. Presumably this is what SpeedCurve do, for example. (Apparently so!)

In this article I’ll show you how to tweak the previous private WebPageTest installation scripts and setup processes to use the `apache` branch, thus reverting to the “classic” UI and freeing you from non-competition concerns. (If you get rich because of this article, please buy me a coffee and hire me, thanks 😁)