This article assumes you have an understanding of what Terraform is, what WebPageTest is and some AWS basics.

Have you ever wanted to have your WebPageTest setup managed as infrastructure as code, so you can keep all those carefully tuned changes and custom settings in source control, ready to confidently and repeatedly destroy and rebuild at a whim?

Sure you have.

In this article I’ll show how to script the setup of your new WPT server, installing from a base OS, and configuring customisations – all within Terraform so you can easily rebuild it with a single command.

Terrawhatnow?

Terraform is an infrastructure-as-code tool with its own domain specific language (DSL), which I find MASSIVELY simpler than AWS’s CloudFormation templates. I’m not going to go into too much detail here, as I’ve covered it a bit in a previous article.

Basically, you define resources as blocks of code, which abstract the underlying API calls that would otherwise do the same work. In this case I use AWS, but TF supports many other providers.

WebPageTest as Infrastructure as Code

For the rest of the article I’m building up a single terraform file, adding parts as needed. When a section isn’t currently the main focus I’ll reduce it to an ellipsis, e.g.:

data "aws_ami" "ubuntu" {

...

}

If you’re following along, make sure you have terraform downloaded and in your PATH. Here we go!

AWS Config

Firstly, a config section: I’m assuming you’ve set up an AWS profile on your local machine called “terraform”, and that you’ve created a terraform user in AWS with the necessary access. If not, pop over to the previous article which goes into terraform a little more.

provider "aws" {

region = "eu-west-1"

profile = "terraform"

}

Ubuntu AMI

To start with we need to select the latest build of a supported Ubuntu release (20.04 “focal” in this case) using Terraform’s aws_ami data source:

provider "aws" {

...

}

data "aws_ami" "ubuntu" {

most_recent = true

filter {

name = "name"

values = ["ubuntu/images/hvm-ssd/ubuntu-focal-20.04-amd64-server-*"]

}

filter {

name = "virtualization-type"

values = ["hvm"]

}

# Canonical

owners = ["099720109477"]

}

I’m going to snip sections we’ve already mentioned for brevity, hence the provider section just has dots in it.

EC2 instance

Now we can pass this into Terraform’s aws_instance:

provider "aws" {

...

}

data "aws_ami" "ubuntu" {

...

}

resource "aws_instance" "wpt" {

# or use a specific ubuntu AMI here if you like

ami = "${data.aws_ami.ubuntu.id}"

}

Let’s add in the instance type and the name of a keypair that we already have in AWS:

provider "aws" {

...

}

data "aws_ami" "ubuntu" {

...

}

resource "aws_instance" "wpt" {

ami = "${data.aws_ami.ubuntu.id}"

instance_type = "t2.micro"

# the name of a keypair that already exists in AWS

# so you can ssh in

key_name = "webpagetest"

}

Running that so far will create a new t2.micro Ubuntu EC2 instance. Yay!

A little more security

Before we move on, let’s lock this instance down just a tad.

provider "aws" {

...

}

data "aws_ami" "ubuntu" {

...

}

resource "aws_instance" "wpt" {

... (we'll come back to this section)

}

resource "aws_security_group" "wpt-sg" {

name = "wpt-sg"

# incoming http

ingress {

from_port = 80

to_port = 80

protocol = "tcp"

description = "HTTP"

cidr_blocks = ["0.0.0.0/0"]

}

# ssh

ingress {

from_port = 22

to_port = 22

protocol = "tcp"

description = "SSH"

cidr_blocks = ["${var.my_ip}/32"]

}

# outgoing access

egress {

from_port = 0

to_port = 0

protocol = "-1"

cidr_blocks = ["0.0.0.0/0"]

}

}

variable "my_ip" {}

The important part above is the line in the ssh block that limits requests to var.my_ip. When you terraform apply you pass in -var my_ip=... with the value of your current IP address, and this security group will then only allow SSH access from that IP. The other two blocks (egress and port 80 ingress) allow the HTTP connectivity needed to make it work. Notice I had to declare variable "my_ip" {} as well, so the variable is recognised.

We can lock this down much more, and I’ll go into a series of security-focussed articles shortly. Honest. 🔒

Now we can just reference that security group in the ec2 instance block:

provider "aws" {

...

}

data "aws_ami" "ubuntu" {

...

}

resource "aws_instance" "wpt" {

ami = "${data.aws_ami.ubuntu.id}"

instance_type = "t2.micro"

key_name = "webpagetest"

# referencing the security group

vpc_security_group_ids = ["${aws_security_group.wpt-sg.id}"]

}

resource "aws_security_group" "wpt-sg" {

...

}

variable "my_ip" {}

If you want to test things, you’ll need to run terraform apply -var my_ip=<value of your IP>. Don’t know your current IP? Head over to https://checkip.amazonaws.com/!

Now we have an Ubuntu EC2 instance, with port 80 open to the world and port 22 (ssh) open to your IP address.
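If looking up your IP by hand gets tedious, one option is to let terraform fetch it. This is only a sketch: it assumes the hashicorp/http provider (v3 or later, where the attribute is called response_body) has been pulled in with terraform init, and the names my_ip and my_ip_cidr are mine rather than anything the rest of this article relies on.

```hcl
# Assumption: hashicorp/http provider, v3 or later
data "http" "my_ip" {
  url = "https://checkip.amazonaws.com/"
}

locals {
  # the service replies with the address followed by a newline,
  # so trim that off before building the /32 CIDR
  my_ip_cidr = "${chomp(data.http.my_ip.response_body)}/32"
}

# then, in the ssh ingress block of the security group:
#   cidr_blocks = [local.my_ip_cidr]
```

With this in place the -var my_ip=... argument and the my_ip variable would no longer be needed. The trade-off is that the lookup happens on every plan, so the SSH rule follows whichever machine last ran terraform.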

WebPageTest installation

Next up is setting it up as a WebPageTest server using the installation script; to do this we’ll use a provisioner block and pass in the commands to execute inline.

The main commands are apt update, apt upgrade, and apt dist-upgrade (dist-upgrade on its own can – in rare cases – miss some packages, so I always just do both) to get the base OS ready (else the WPT script fails), then the wpt script itself – the other parameters make the updates and upgrades happen without prompting for user input:

provider "aws" {

...

}

data "aws_ami" "ubuntu" {

...

}

resource "aws_instance" "wpt" {

...

key_name = "webpagetest"

provisioner "remote-exec" {

inline = [

# configure unattended update and upgrades;

# DEBIAN_FRONTEND is set on each command, as export is a shell builtin and "sudo export" fails

"sudo DEBIAN_FRONTEND=noninteractive apt -yq -o Dpkg::Options::=\"--force-confdef\" -o Dpkg::Options::=\"--force-confold\" update",

"sudo DEBIAN_FRONTEND=noninteractive apt -yq -o Dpkg::Options::=\"--force-confdef\" -o Dpkg::Options::=\"--force-confold\" upgrade",

"sudo DEBIAN_FRONTEND=noninteractive apt -yq -o Dpkg::Options::=\"--force-confdef\" -o Dpkg::Options::=\"--force-confold\" dist-upgrade",

# install wpt server

"wget -O - https://raw.githubusercontent.com/WPO-Foundation/wptserver-install/master/ubuntu.sh | bash"

]

connection {

host = "${self.public_ip}"

type = "ssh"

user = "ubuntu"

private_key = file("../your/wpt/file.pem")

}

}

}

# show the generated EC2 instance's IP address

output "public_ip" {

value = "${aws_instance.wpt.public_ip}"

}

resource "aws_security_group" "wpt-sg" {

...

}

variable "my_ip" {}

You need to pass in the location on your local hard disk of the private key file for the keypair that you referenced in key_name, since that provisioner block will SSH in on your behalf in order to execute the commands.

I’ve added an output here as well, which means you’ll see the public IP address of your new instance when terraform finishes; just makes things easier.

Try a terraform apply -var my_ip=<your IP goes here> and after a few minutes you should be able to browse to the public IP of your EC2 instance and see the familiar WebPageTest homepage! Yay again!
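To save copying the IP into the browser by hand, a second output can build the address for you. A tiny sketch; the name wpt_url is just my choice, and it assumes the server answers on plain HTTP on port 80 as set up above.

```hcl
output "wpt_url" {
  description = "Address of the WebPageTest homepage"
  value       = "http://${aws_instance.wpt.public_ip}/"
}
```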

WebPageTest Settings

Next up is the configuration and test agent locations. I’m going to assume that you’ve already set up your own test agents (if not, you can check the non-terraform version of this article here which covers creating test agent AMIs too).

For the settings file I’ve chosen to use a terraform template file, which I’ve saved in the same directory and called settings.tpl. We’re going to use another provisioner block – this time of type file – to create a new file on the server based on the template and some variables.

The template file settings.tpl looks like this:

ec2_key=${iam_key}

ec2_secret=${iam_secret}

EC2.default=eu-west-1

So we just need to pass in those 2 variables: iam_key and iam_secret:

provider "aws" {

...

}

data "aws_ami" "ubuntu" {

...

}

resource "aws_instance" "wpt" {

...

provisioner "remote-exec" {

...

}

provisioner "file" {

content = templatefile(

"settings.tpl",

{

iam_key = <tbc>

iam_secret = <tbc>

}

)

destination = "~/settings.ini"

connection {

host = "${self.public_ip}"

type = "ssh"

user = "ubuntu"

private_key = file("../your/wpt/file.pem")

}

}

}

output "public_ip" {

...

}

resource "aws_security_group" "wpt-sg" {

...

}

variable "my_ip" {}

But where do we get the missing <tbc> values from? Great question! Let’s do that next and then come back:

provider "aws" {

...

}

data "aws_ami" "ubuntu" {

...

}

resource "aws_instance" "wpt" {

...

provisioner "remote-exec" {

...

}

provisioner "file" {

...

}

}

output "public_ip" {

...

}

resource "aws_security_group" "wpt-sg" {

...

}

variable "my_ip" {}

# create a user

resource "aws_iam_user" "wpt-user" {

name = "wpt-user"

}

# give that user an access key

resource "aws_iam_access_key" "wpt-user" {

user = "${aws_iam_user.wpt-user.name}"

}

# grant that user EC2 powers,

# so it can start and stop test agents

resource "aws_iam_user_policy_attachment" "ec2-policy-attach" {

user = "${aws_iam_user.wpt-user.name}"

policy_arn = "arn:aws:iam::aws:policy/AmazonEC2FullAccess"

}

Ok, cool, now we have the IAM details, let’s return to the WPT server setup:

provider "aws" {

...

}

data "aws_ami" "ubuntu" {

...

}

resource "aws_instance" "wpt" {

...

provisioner "remote-exec" {

...

}

provisioner "file" {

content = templatefile(

"settings.tpl",

{

iam_key = aws_iam_access_key.wpt-user.id

iam_secret = aws_iam_access_key.wpt-user.secret

}

)

destination = "~/settings.ini"

connection {

host = "${self.public_ip}"

type = "ssh"

user = "ubuntu"

private_key = file("../your/wpt/file.pem")

}

}

}

output "public_ip" {

...

}

resource "aws_security_group" "wpt-sg" {

...

}

variable "my_ip" {}

resource "aws_iam_user" "wpt-user" {

...

}

resource "aws_iam_access_key" "wpt-user" {

...

}

resource "aws_iam_user_policy_attachment" "ec2-policy-attach" {

...

}

Nearly there! Now add in your own test agents via your own locations.ini. Create a locations.ini file that looks like this, referring to the AMI ID of the agent AMIs you created previously:

[locations]

1=eu-west-1-group

default=eu-west-1-group

[eu-west-1-group]

1=eu-west-1

label="EU West Group"

default=eu-west-1

[eu-west-1]

browser=Chrome

label="EU West"

ami=ami-YOUR AMI GOES HERE

size=c4.large

region=eu-west-1

Now it’s another provisioner block of type file, but this time we’re just copying it straight over to the home directory for the ubuntu user:

provider "aws" {

...

}

data "aws_ami" "ubuntu" {

...

}

resource "aws_instance" "wpt" {

...

provisioner "remote-exec" {

...

}

provisioner "file" {

...

}

provisioner "file" {

source = "locations.ini"

destination = "~/locations.ini"

connection {

host = "${self.public_ip}"

type = "ssh"

user = "ubuntu"

private_key = file("../your/wpt/file.pem")

}

}

}

output "public_ip" {

...

}

resource "aws_security_group" "wpt-sg" {

...

}

variable "my_ip" {}

resource "aws_iam_user" "wpt-user" {

...

}

resource "aws_iam_access_key" "wpt-user" {

...

}

resource "aws_iam_user_policy_attachment" "ec2-policy-attach" {

...

}

Lastly we need to move those ini files from the home directory to the settings/common/ directory; the file provisioner doesn’t have permission to put those files there directly, so we’ll use another remote-exec to move them:

provider "aws" {

...

}

data "aws_ami" "ubuntu" {

...

}

resource "aws_instance" "wpt" {

...

provisioner "remote-exec" {

...

}

provisioner "file" {

...

}

provisioner "file" {

...

}

# move config files to correct place

provisioner "remote-exec" {

inline = [

"sudo mkdir -p /var/www/webpagetest/www/settings/common/",

"sudo mv ~/*.ini /var/www/webpagetest/www/settings/common/"

]

connection {

host = "${self.public_ip}"

type = "ssh"

user = "ubuntu"

private_key = file("../your/wpt/file.pem")

}

}

}

output "public_ip" {

...

}

resource "aws_security_group" "wpt-sg" {

...

}

variable "my_ip" {}

resource "aws_iam_user" "wpt-user" {

...

}

resource "aws_iam_access_key" "wpt-user" {

...

}

resource "aws_iam_user_policy_attachment" "ec2-policy-attach" {

...

}

Kick off a terraform apply -var my_ip=<your IP here> and you will get a WebPageTest server, using your configuration, and your own WebPageTest agent AMIs! Woohoo! 🎉

The End! 🙂

Summary

That script in full:

provider "aws" {

region = "eu-west-1"

profile = "terraform"

}

data "aws_ami" "ubuntu" {

most_recent = true

filter {

name = "name"

values = ["ubuntu/images/hvm-ssd/ubuntu-focal-20.04-amd64-server-*"]

}

filter {

name = "virtualization-type"

values = ["hvm"]

}

# Canonical

owners = ["099720109477"]

}

resource "aws_instance" "wpt" {

ami = "${data.aws_ami.ubuntu.id}"

instance_type = "t2.micro"

key_name = "webpagetest"

vpc_security_group_ids = ["${aws_security_group.wpt-sg.id}"]

provisioner "remote-exec" {

inline = [

# configure unattended update and upgrades;

# DEBIAN_FRONTEND is set on each command, as export is a shell builtin and "sudo export" fails

"sudo DEBIAN_FRONTEND=noninteractive apt -yq -o Dpkg::Options::=\"--force-confdef\" -o Dpkg::Options::=\"--force-confold\" update",

"sudo DEBIAN_FRONTEND=noninteractive apt -yq -o Dpkg::Options::=\"--force-confdef\" -o Dpkg::Options::=\"--force-confold\" upgrade",

"sudo DEBIAN_FRONTEND=noninteractive apt -yq -o Dpkg::Options::=\"--force-confdef\" -o Dpkg::Options::=\"--force-confold\" dist-upgrade",

# install wpt server

"wget -O - https://raw.githubusercontent.com/WPO-Foundation/wptserver-install/master/ubuntu.sh | bash"

]

connection {

host = "${self.public_ip}"

type = "ssh"

user = "ubuntu"

private_key = file("../your/wpt/file.pem")

}

}

provisioner "file" {

content = templatefile(

"settings.tpl",

{

iam_key = aws_iam_access_key.wpt-user.id

iam_secret = aws_iam_access_key.wpt-user.secret

}

)

destination = "~/settings.ini"

connection {

host = "${self.public_ip}"

type = "ssh"

user = "ubuntu"

private_key = file("../your/wpt/file.pem")

}

}

provisioner "file" {

source = "locations.ini"

destination = "~/locations.ini"

connection {

host = "${self.public_ip}"

type = "ssh"

user = "ubuntu"

private_key = file("../your/wpt/file.pem")

}

}

# move config files to correct place

provisioner "remote-exec" {

inline = [

"sudo mkdir -p /var/www/webpagetest/www/settings/common/",

"sudo mv ~/*.ini /var/www/webpagetest/www/settings/common/"

]

connection {

host = "${self.public_ip}"

type = "ssh"

user = "ubuntu"

private_key = file("../your/wpt/file.pem")

}

}

}

output "public_ip" {

value = "${aws_instance.wpt.public_ip}"

}

resource "aws_security_group" "wpt-sg" {

name = "wpt-sg"

# incoming http

ingress {

from_port = 80

to_port = 80

protocol = "tcp"

description = "HTTP"

cidr_blocks = ["0.0.0.0/0"]

}

# ssh

ingress {

from_port = 22

to_port = 22

protocol = "tcp"

description = "SSH"

cidr_blocks = ["${var.my_ip}/32"]

}

# outgoing access

egress {

from_port = 0

to_port = 0

protocol = "-1"

cidr_blocks = ["0.0.0.0/0"]

}

}

variable "my_ip" {}

resource "aws_iam_user" "wpt-user" {

name = "wpt-user"

}

resource "aws_iam_access_key" "wpt-user" {

user = "${aws_iam_user.wpt-user.name}"

}

resource "aws_iam_user_policy_attachment" "ec2-policy-attach" {

user = "${aws_iam_user.wpt-user.name}"

policy_arn = "arn:aws:iam::aws:policy/AmazonEC2FullAccess"

}

Extra Credit Bonuses

Now we can get on to some fun stuff that isn’t strictly necessary, but is pretty cool!

Test Archiving

This setup won’t last too long as you’ll run out of disc space. The best solution here is to set up an S3 bucket to archive the tests to:

# random string to aim for a unique bucket name

resource "random_string" "bucket" {

length = 10

special = false

upper = false

}

# S3 bucket for archives

resource "aws_s3_bucket" "wpt-archive" {

bucket = "my-wpt-test-archive-${random_string.bucket.result}"

acl = "private"

}

# Change the EC2 setup

resource "aws_instance" "wpt" {

...

# configure overrides settings.ini file

provisioner "file" {

content = templatefile(

"settings.tpl",

{

...

# new bit

wpt_archive = aws_s3_bucket.wpt-archive.bucket

}

)

}

}

...

Then update your settings.tpl with the necessary S3 config:

ec2_key=${iam_key}

ec2_secret=${iam_secret}

EC2.default=eu-west-1

; new stuff

archive_s3_server=s3.amazonaws.com

archive_s3_key=${iam_key}

archive_s3_secret=${iam_secret}

archive_s3_bucket=${wpt_archive}

archive_days=1

cron_archive=1

You’ll need to run terraform init again to bring in the random provider, which supplies random_string; the S3 bucket resource comes from the AWS provider you already have.
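If you’d rather state the providers explicitly than rely on terraform working them out, a required_providers block does the job. This is a sketch assuming Terraform 0.13 or later (where the source syntax exists); I’ve deliberately left out version constraints, so add whichever ones suit your setup.

```hcl
terraform {
  required_providers {
    # already in use for everything AWS, including the S3 bucket
    aws = {
      source = "hashicorp/aws"
    }

    # needed for the random_string resource
    random = {
      source = "hashicorp/random"
    }
  }
}
```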

Usually when you terraform destroy everything will be torn down, and sometimes a terraform apply results in resources being destroyed and replaced; however, if the S3 bucket is not empty (i.e. you have at least one test already archived) then terraform will just report that it can’t delete the bucket and carry on with the other stuff. Very handy!
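That behaviour can be made explicit rather than left to chance. The sketch below shows the two relevant settings on the bucket; force_destroy is an argument of aws_s3_bucket and prevent_destroy is a standard terraform lifecycle option, but whether you want either is a judgment call, and I’ve left the bucket’s other arguments out for brevity.

```hcl
resource "aws_s3_bucket" "wpt-archive" {
  bucket = "my-wpt-test-archive-${random_string.bucket.result}"

  # false is the default: a bucket that still holds objects cannot be deleted.
  # Setting it to true would let terraform empty and delete the bucket.
  force_destroy = false

  lifecycle {
    # optional extra guard: any plan that would destroy the bucket fails
    prevent_destroy = true
  }
}
```

One thing to be aware of: with prevent_destroy set, a full terraform destroy stops with an error at the plan stage instead of skipping the bucket and removing everything else, so only use it if that is what you want.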

CrUX config

If you’ve read my previous article on adding Chrome User Experience data to your WebPageTest setup I’m sure you’re wondering how to add that in here. Well, here’s how!

resource "aws_instance" "wpt" {

...

# configure overrides settings.ini file

provisioner "file" {

content = templatefile(

"settings.tpl",

{

...

# new bit

crux_api_key = (length(var.crux_api_key)>0 ? "crux_api_key=${var.crux_api_key}" : "")

}

)

}

}

# new variable

variable "crux_api_key" {

type = string

default = ""

description = "Chrome UX API key to pull down CrUX data"

}

And update settings.tpl:

ec2_key=${iam_key}

ec2_secret=${iam_secret}

EC2.default=eu-west-1

archive_s3_server=s3.amazonaws.com

archive_s3_key=${iam_key}

archive_s3_secret=${iam_secret}

archive_s3_bucket=${wpt_archive}

archive_days=1

cron_archive=1

; new stuff

${crux_api_key}

Then you can pass in your CrUX API key as a variable via terraform apply -var crux_api_key=<your CrUX API key here>, and if you don’t pass one in then that line is just left empty.
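Once there are a couple of variables, typing -var each time gets old. Terraform automatically loads a file called terraform.tfvars from the working directory, so a sketch like this saves the typing; both values below are placeholders, not real ones.

```hcl
# terraform.tfvars (loaded automatically by terraform plan and apply)

# placeholder address, use your own current IP
my_ip = "203.0.113.10"

# placeholder key; leave this line out entirely to skip the CrUX setup
crux_api_key = "YOUR-CRUX-API-KEY"
```

Since this file now contains an API key, it is probably one to keep out of source control.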

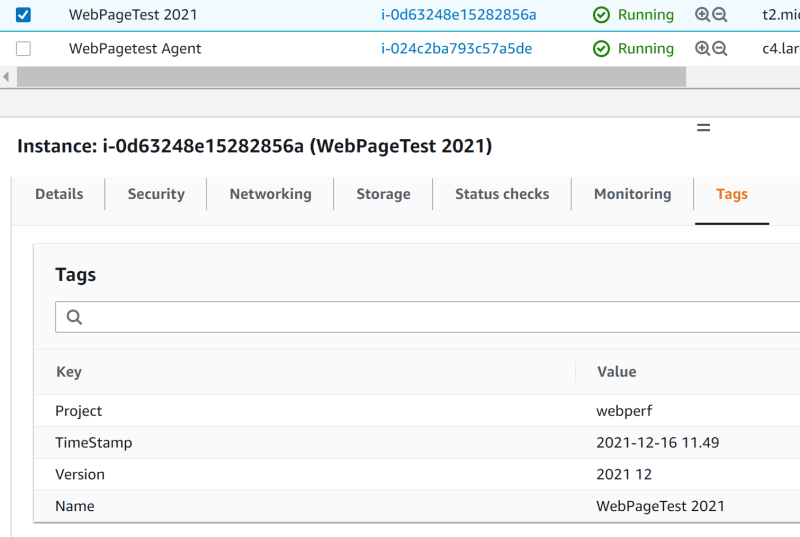

Extra bonus: AWS Tags everywhere

You can configure “default tags” which will be applied to everything in this terraform project:

provider "aws" {

region = "eu-west-1"

profile = "terraform"

# new bit!

default_tags {

tags = {

Name = "WebPageTest ${formatdate("YYYY",timestamp())}"

Project = "webperf"

Version = formatdate("YYYY MM",timestamp())

TimeStamp = formatdate("YYYY-MM-DD hh.mm",timestamp())

}

}

}

BEWARE! I’ve found that using formatdate() or variables in default tags doesn’t always work, even with the same version of terraform on different projects. There’s an issue logged, but maybe just don’t use variables and set your own values explicitly. That said, the above works fine in this project. For me. 🙂
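For comparison, here is a sketch of the “set your own values explicitly” route. One thing that is certain about timestamp() is that it returns the current time on every run, so tags built from it differ on each plan; fixed strings avoid that, though I can’t promise this is the cause of the issue mentioned above. The Version value is a made-up example.

```hcl
provider "aws" {
  region  = "eu-west-1"
  profile = "terraform"

  default_tags {
    tags = {
      # fixed values only, so nothing changes between runs
      Name    = "WebPageTest"
      Project = "webperf"
      Version = "2021 06" # hypothetical value, bump it by hand
    }
  }
}
```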

AWS Tags really everywhere

You can pass in AWS tags to your WPT agents too. You can set these explicitly via settings.ini, so let’s update our settings.tpl:

ec2_key=${iam_key}

ec2_secret=${iam_secret}

EC2.default=eu-west-1

archive_s3_server=s3.amazonaws.com

archive_s3_key=${iam_key}

archive_s3_secret=${iam_secret}

archive_s3_bucket=${wpt_archive}

archive_days=1

cron_archive=1

${crux_api_key}

; new stuff

EC2.tags="Project=>WebPageTest|Author=>rposbo"

Now each wpt agent will get the tags Project and Author with the values “WebPageTest” and “rposbo” respectively.

Seems a bit too easy though, right? Let’s upgrade by passing these in as a variable:

ec2_key=${iam_key}

ec2_secret=${iam_secret}

EC2.default=eu-west-1

archive_s3_server=s3.amazonaws.com

archive_s3_key=${iam_key}

archive_s3_secret=${iam_secret}

archive_s3_bucket=${wpt_archive}

archive_days=1

cron_archive=1

${crux_api_key}

; changed stuff

EC2.tags="${agent_tags}"

Then pass it in from the EC2 setup:

resource "aws_instance" "wpt" {

...

# configure overrides settings.ini file

provisioner "file" {

content = templatefile(

"settings.tpl",

{

...

# new bit

agent_tags = "Project=>WebPageTest|Author=>rposbo"

}

)

}

}

Ok, that hasn’t really changed things much. Now for a little bit of terraform magic that took me a while to figure out: passing the default tags through to the agents!

resource "aws_instance" "wpt" {

...

# configure overrides settings.ini file

provisioner "file" {

content = templatefile(

"settings.tpl",

{

...

# new bit

agent_tags = join("|", [for k, v in self.tags_all : "${k}=>${v}" if k != "Name"])

}

)

}

}

I ignore the Name tag, as this is set to “WebPageTest Agent” automatically anyway, but other than that I’m transforming the default_tags (which are merged with any other tags on this resource and exposed as self.tags_all to the provisioner block) into a list of key=>value strings, then joining them with | and passing that in to the template variable – cool, huh?
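To see what that expression produces without building any infrastructure, here is a standalone sketch you can drop into an empty directory. The map is a made-up stand-in for self.tags_all, and the example_ names are mine.

```hcl
locals {
  # hypothetical tag values, standing in for self.tags_all
  example_tags = {
    Name    = "WebPageTest 2021"
    Project = "webperf"
    Version = "2021 06"
  }

  # same expression as in the provisioner, pointed at the map above
  example_agent_tags = join("|", [
    for k, v in local.example_tags : "${k}=>${v}" if k != "Name"
  ])
}

output "example_agent_tags" {
  value = local.example_agent_tags
}
```

A for expression walks a map in key order, so Name is filtered out and the output should come back as Project=>webperf|Version=>2021 06, which is the format EC2.tags expects.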


DRY keypairs

(Don’t Repeat Yourself)

Notice how we keep repeating the keypair info for the file and remote-exec blocks?

private_key = file("../your/wpt/file.pem")

We can put that in a local variable so we only need to change it once if necessary:

locals {

keypair_content = file("../your/wpt/file.pem")

}

resource "aws_instance" "wpt" {

...

connection {

host = "${self.public_ip}"

type = "ssh"

user = "ubuntu"

private_key = "${local.keypair_content}"

}

}

Or even better, have it as a variable:

locals {

keypair_content = file("${var.keypair_location}")

}

resource "aws_instance" "wpt" {

...

connection {

host = "${self.public_ip}"

type = "ssh"

user = "ubuntu"

private_key = "${local.keypair_content}"

}

}

variable "keypair_location" {

type = string

description = "location on local disk of your keypair file"

}

Final Post-Credits Summary With All The New Cool Stuff Too

Here’s the full updated script:

provider "aws" {

region = "eu-west-1"

profile = "terraform"

default_tags {

tags = {

Name = "WebPageTest ${formatdate("YYYY",timestamp())}"

Project = "webperf"

Version = formatdate("YYYY MM",timestamp())

TimeStamp = formatdate("YYYY-MM-DD hh.mm",timestamp())

}

}

}

locals {

keypair_content = file("${var.keypair_location}")

}

# random string to aim for a unique bucket name

resource "random_string" "bucket" {

length = 10

special = false

upper = false

}

# S3 bucket for archives

resource "aws_s3_bucket" "wpt-archive" {

bucket = "my-wpt-test-archive-${random_string.bucket.result}"

acl = "private"

}

data "aws_ami" "ubuntu" {

most_recent = true

filter {

name = "name"

values = ["ubuntu/images/hvm-ssd/ubuntu-focal-20.04-amd64-server-*"]

}

filter {

name = "virtualization-type"

values = ["hvm"]

}

# Canonical

owners = ["099720109477"]

}

resource "aws_instance" "wpt" {

ami = "${data.aws_ami.ubuntu.id}"

instance_type = "t2.micro"

key_name = "webpagetest"

vpc_security_group_ids = ["${aws_security_group.wpt-sg.id}"]

provisioner "remote-exec" {

inline = [

# configure unattended update and upgrades;

# DEBIAN_FRONTEND is set on each command, as export is a shell builtin and "sudo export" fails

"sudo DEBIAN_FRONTEND=noninteractive apt -yq -o Dpkg::Options::=\"--force-confdef\" -o Dpkg::Options::=\"--force-confold\" update",

"sudo DEBIAN_FRONTEND=noninteractive apt -yq -o Dpkg::Options::=\"--force-confdef\" -o Dpkg::Options::=\"--force-confold\" upgrade",

"sudo DEBIAN_FRONTEND=noninteractive apt -yq -o Dpkg::Options::=\"--force-confdef\" -o Dpkg::Options::=\"--force-confold\" dist-upgrade",

# install wpt server

"wget -O - https://raw.githubusercontent.com/WPO-Foundation/wptserver-install/master/ubuntu.sh | bash"

]

connection {

host = "${self.public_ip}"

type = "ssh"

user = "ubuntu"

private_key = "${local.keypair_content}"

}

}

provisioner "file" {

content = templatefile(

"settings.tpl",

{

iam_key = aws_iam_access_key.wpt-user.id

iam_secret = aws_iam_access_key.wpt-user.secret

wpt_archive = aws_s3_bucket.wpt-archive.bucket

crux_api_key = (length(var.crux_api_key)>0 ? "crux_api_key=${var.crux_api_key}" : "")

agent_tags = join("|", [for k, v in self.tags_all : "${k}=>${v}" if k != "Name"])

}

)

destination = "~/settings.ini"

connection {

host = "${self.public_ip}"

type = "ssh"

user = "ubuntu"

private_key = "${local.keypair_content}"

}

}

provisioner "file" {

source = "locations.ini"

destination = "~/locations.ini"

connection {

host = "${self.public_ip}"

type = "ssh"

user = "ubuntu"

private_key = "${local.keypair_content}"

}

}

# move config files to correct place

provisioner "remote-exec" {

inline = [

"sudo mkdir -p /var/www/webpagetest/www/settings/common/",

"sudo mv ~/*.ini /var/www/webpagetest/www/settings/common/"

]

connection {

host = "${self.public_ip}"

type = "ssh"

user = "ubuntu"

private_key = "${local.keypair_content}"

}

}

}

resource "aws_security_group" "wpt-sg" {

name = "wpt-sg"

# incoming http

ingress {

from_port = 80

to_port = 80

protocol = "tcp"

description = "HTTP"

cidr_blocks = ["0.0.0.0/0"]

}

# ssh

ingress {

from_port = 22

to_port = 22

protocol = "tcp"

description = "SSH"

cidr_blocks = ["${var.my_ip}/32"]

}

# outgoing access

egress {

from_port = 0

to_port = 0

protocol = "-1"

cidr_blocks = ["0.0.0.0/0"]

}

}

variable "my_ip" {}

variable "keypair_location" {

type = string

description = "location on local disk of your keypair file"

}

variable "crux_api_key" {

type = string

default = ""

description = "Chrome UX API key to pull down CrUX data"

}

resource "aws_iam_user" "wpt-user" {

name = "wpt-user"

}

resource "aws_iam_access_key" "wpt-user" {

user = "${aws_iam_user.wpt-user.name}"

}

resource "aws_iam_user_policy_attachment" "ec2-policy-attach" {

user = "${aws_iam_user.wpt-user.name}"

policy_arn = "arn:aws:iam::aws:policy/AmazonEC2FullAccess"

}

output "public_ip" {

value = "${aws_instance.wpt.public_ip}"

}

Have a go, and let me know what you think.