Previously I’ve had some difficulty backing up my microistance WordPress MySql db; running mysqldump would cause 100% CPU use until I rebooted the instance and restart apache & mysql.

Why was mysqldump evil?

At least I’ve finally discovered the reason I’d get those nasty CPU spikes:

![w2db_fail[1] w2db_fail[1]](https://res.cloudinary.com/otomotech/image/upload/f_auto,q_auto/robinosborne/2013/05/w2db_fail1.png)

The culprit is, most likely, the Slimstat plugin:

![screenshot-2[1] screenshot-2[1]](https://res.cloudinary.com/otomotech/image/upload/f_auto,q_auto/robinosborne/2013/05/screenshot21.png)

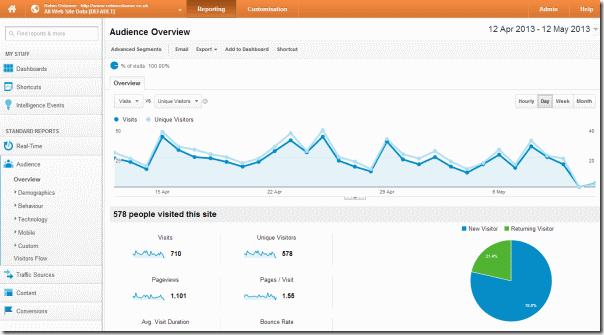

This is a real-time web analytics plugin which has a lovely dashboard view showing you everything about who is or has viewed your site, how long they were there for, how they got there, etc. Addictive stuff.

However, in order to get these stats it saves a lot of data in a new set of mysql tables; these tables not only get really big, but are also being accessed constantly.

As such, a brief overview for backing up from the command line, as referenced in the WordPress codex has a couple of mysqldump commands similar to:

[code]mysqldump –add-drop-table -h <hostname> -u <username> -p <database name>[/code]

This will try to backup everything in the named database, which would include all Slimstat tables.

Solutions

There are a couple of approaches to this. I’ve now done both.

- Change your backup script to select the wordpress mysql tables only

- Use google analytics instead of slimstat

Using google analytics

Head over to www.google.co.uk/analytics and setup your account, then save the file it gives you onto your web host to prove it’s you. Now you can get lovely stats just by placing a tracking script reference in the footer of your WordPress theme; be careful to reimplement this if you change themes.

Selecting the WordPress tables

Get the table names for all wordpress tables (assuming you went with the default naming convention of a “wp_” prefix; if not, adapt to suit your convention):

mysql -u<username> -p<password> –skip-column-names -e "select table_name from information_schema.TABLES where TABLE_NAME like ‘wp_%’;"

Pipe it into mysqldump:

mysql -u<username> -p<password> –skip-column-names -e "select table_name from information_schema.TABLES where TABLE_NAME like ‘wp_%’;" | xargs mysqldump –add-drop-table -u<username> -p<password> <database name>

send that output to a file:

mysql -u<username> -p<password> –skip-column-names -e "select table_name from information_schema.TABLES where TABLE_NAME like ‘wp_%’;" | xargs mysqldump –add-drop-table -u<username> -p<password> <database name> > <backup file name>

In summary

Using this info I can now create a backup script that actually works; it will generate a single gzipped tar of all my website’s content files (including wordpress code) and the database backup:

[code]#!/bin/bash

# Set the date format, filename and the directories where your backup files will be placed and which directory will be archived.

NOW=$(date +"%Y-%m-%d-%H%M")

FILE="mywebsitebackup.$NOW.tar"

BACKUP_DIR="/home/ec2-user/_backup"

WWW_DIR="/var/www"

# MySQL database credentials

DB_USER="root"

DB_PASS="enteryourpwdhere"

DB_NAME="wordpress"

DB_FILE="mydbbackup.$NOW.sql"

# dump the wordpress dbs

mysql -u$DB_USER -p$DB_PASS –skip-column-names -e "select table_name from information_schema.TABLES where TABLE_NAME like ‘wp_%’;" | xargs mysqldump –add-drop-table -u$DB_USER -p$DB_PASS $DB_NAME > $BACKUP_DIR/$DB_FILE

# archive the website files

tar -cvf $BACKUP_DIR/$FILE $WWW_DIR

# append the db backup to the archive

tar –append –file=$BACKUP_DIR/$FILE $BACKUP_DIR/$DB_FILE

# remove the db backup

rm $BACKUP_DIR/$DB_FILE

# compress the archive

gzip -9 $BACKUP_DIR/$FILE

[/code]

Save that in a script, make it executable, and if you like you can add a cron task to create a backup on a regular basis. A couple of things to watch out for:

- These files can be big, so will use up a good chunk of your elastic block store volumes, which cost a few dollars more than a teeny EC2 instance

- Creating the archive can be a bit processor intensive sometimes, which may stress your poor little microinstance

Coming up

Using this backup I automate the creation of a duplicate blog and script the entire process using Chef and Vagrant!